In the 2004 science fiction film I, Robot, based on Isaac Asimov’s classic short-story collection, AI plays a major role in the story. The film is set in 2035, and it’s the sort of future we’ve imagined in various stories, movies, and TV shows: there are totally autonomous cars, skyscrapers so tall you can’t see their peaks, and robotics so advanced that every household has a personal robot to assist with cooking, cleaning, and virtually anything else you could want it to do, including being your friend. That last possibility is the central dilemma for the main character, Del Spooner (played by Will Smith), who befriends a robot named Sonny as they try to solve the murder of a roboticist and, as often happens in action-thrillers, uncover a far-reaching conspiracy along the way.

While the back-and-forth between the two leads sometimes takes on the flavor of a buddy comedy, the contrast between the two characters, and the tension in how they interact, tells us a lot about the current “AI question.” Spooner doesn’t trust robots and sees them as humanity’s downfall; Sonny admires humanity, wants only to be more like it, and doesn’t understand why he can’t be. The film’s dialogue and ideas raise other questions, too: Can robots and humans work in harmony? Can they ever be the same? Are they actually different at all? Or are humans just advanced computers?

Watching the film now, it’s easy to laugh at how futuristic things look compared to how they actually are. As with old episodes of The Jetsons, it’s clear that we humans tend to be very optimistic about our rate of progress. But with the recent developments in AI and machine learning, are we really that far off?

Most recently, a chatbot called ChatGPT has been dominating the conversation about AI, and the language models behind it have proven remarkably effective at a wide variety of tasks. In a way, it does seem like the future, and its ability to complete what once seemed uniquely human tasks can feel uncanny. You can ask it to write blog posts (I promise this one was written by a human) or cover letters, or even to come up with ideas for where to eat tonight, and the results all seem quite “real,” authentic, as if they could have come from a human. Granted, this language model is not the only prominent AI software, and machine learning has become a feature of many new tools, especially those used by businesses.

Throughout the discussion of AI, ever since the field emerged in the 1950s, a primary concern has been that humans will lose their jobs, and that AI might change the human relationship to work as a whole. The thinking goes: if we teach robots how to do everything, will we eventually need humans at all? If it were possible to program an artificial intelligence so that it would be indistinguishable from so-called “real” humans (another movie, Blade Runner, tackles this question excellently), would the distinction between human and AI become meaningless? If that thought sent a shudder down your spine, you’re not alone. It is a widely shared anxiety, rooted in a fear of obsolescence; humans tirelessly build machines to help them accomplish their goals of dominion over the natural world, but they fear machines that might take dominion over them. It is understandable, given that humans are prone to thinking they’re the most important creatures on the planet, and in fact the concern dates back to the Industrial Revolution, as far back as William Blake’s “satanic mills” in the early 19th century.

All this to say that technological advancements, and our fears that they will supersede our sense of humanity, have been around a long time. It’s only now that those questions take on a new weight, and beg to be examined in the light of the present day.

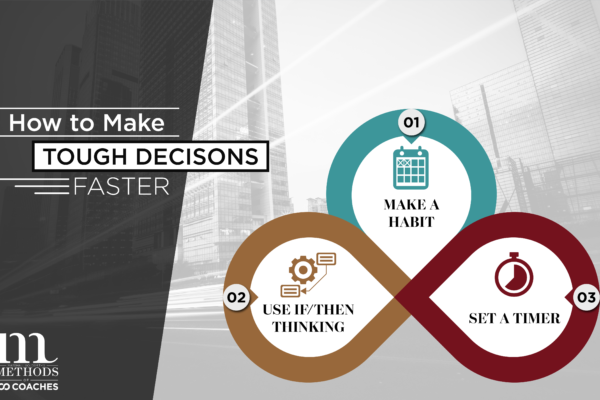

One of the areas in which AI is being most critically examined is its potential in the business and management world. Some experts have argued that AI-assisted leadership will reduce time spent on “mundane” or “rote” tasks such as routine emails (which tend to follow a template) and surface-level check-ins (such as: “How close to being done with the project are you?”). In these applications, the primary benefit is speed and efficiency, with automation saving precious time for leaders and managers. But the catch is this: AI can complete only those tasks that are predetermined, repeatable, predictable, and part of what might be deemed routine, “narrow” intelligence. It cannot yet perform the tasks that require creative, complex problem solving and novelty. Underlying this is a loose reading of Moravec’s paradox, which is often summarized as: what is easy for humans to do is hard for a computer to do, and vice versa.

In short, this means that the behaviors and activities typically considered management might very well be taken over by AI, whereas the more nebulous realms of leadership will, at least for the time being, be reserved for humans. This is because much of what management entails has to do with maintaining the status quo, controlling the chaos and avoiding change, and keeping things operational based on pre-existing protocols; you aren’t necessarily solving complex interpersonal issues or trying to drive the business forward with a new vision. Management may look like sending out regular “check-in” messages at predetermined intervals, responding with simple “yes/no” answers, and going through various checklists; we’re not far off from AI accomplishing just these things.

“Leadership,” by contrast, draws more heavily on what are known as soft skills, and it requires a nimble approach to problem solving as well as strength in dealing with people. Soft skills are hard to pin down; the term refers generally to a large set of behaviors and intuitions that people use to communicate effectively and civilly with each other. When there’s an interpersonal conflict between two team members, leadership is needed to understand the nuance of the argument, as well as the nuance of the quarreling employees’ personalities, in order to put forth a solution that satisfies every party. Often in these situations the issue is not cut-and-dried; tensions can arise from years of work history and escalating resentments that don’t necessarily have any “rationale” or “logic” attached to them. And that’s just the thing: humans are emotional, unpredictable, and often rash in their decisions when in compromised emotional states. No matter how powerful our predictive modeling becomes, humans will always find new ways to behave that cannot be fully mapped. Because of this, AI isn’t likely to advance to the point of standing in completely for a leader, at least not for a long time. While “hard” processes, such as those in the manufacturing of a physical product, have long gone the way of automation and continue to do so, the “soft” skills will still be a priority, because they are what make us human, and despite all of our technology, we still live in a world made by and largely for humans.

As we see time and time again, the future of leadership is about investing in soft skills and becoming more human, not less; more organic, less mechanical. Leaders will need to understand not only the technologies they use but also the people who use them. To do this, on-demand training like Methods can be a great solution. As long as humans are around, there will be emotion and illogic, and we will need humans to understand and deal with these things.

Sources:

https://knowledge.wharton.upenn.edu/article/artificial-intelligence-will-change-think-leadership/

https://www.peoplemanagement.co.uk/article/1815587/ai-will-change-leadership

https://www.forbes.com/sites/forbestechcouncil/2022/10/06/how-will-ai-technology-change-leadership-in-the-future/?sh=496fdabe5d4f